Researchers at the Johns Hopkins Applied Physics Laboratory (APL) announced on October 25 that they have developed a mixed reality system that amplifies subtle movements of the face and eyes. The amplification improves the wearer's ability to detect social signals and emotional displays in real time.

The Mixed Reality Social Prosthesis system emphasizes expressions of emotion by overlaying measured psychophysiological data on the observed person's face. This enables finer perception of the nonverbal cues displayed during social interaction.

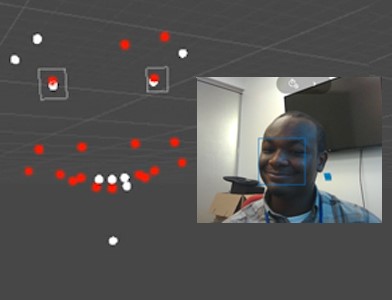

APL researchers developed the Mixed Reality Social Prosthesis system to sense facial actions, pupil size, blink rate, and gaze direction. The system then overlays those signals on the face using the HoloLens, Microsoft's mixed reality headset. “The result is dramatic accentuation of subtle changes in the face, including changes that people are not usually aware of, like pupil dilation or nostril flare,” said Ariel Greenberg, an APL research scientist. The system collects facial signals from a range of sensors, synchronizes those signals in real time, registers them in real space, and then overlays them on the face of an interaction partner.
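The announcement does not say how APL synchronizes its sensor streams. The sketch below is a minimal illustration of one common approach, nearest-timestamp alignment. It assumes that each sensor emits timestamped samples on a shared clock. For each display frame, the code picks the closest sample from every stream and drops any sample that lies too far from the frame time. The names `Sample` and `synchronize`, the stream names, and the 50 ms tolerance are all hypothetical.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class Sample:
    t: float      # timestamp in seconds on a shared clock (assumption)
    value: float  # sensor reading, e.g. pupil diameter in mm (hypothetical)


def _nearest(times, samples, t):
    """Return the sample closest to time t; times must be sorted ascending."""
    if not samples:
        return None
    i = bisect_left(times, t)
    if i == 0:
        return samples[0]
    if i == len(samples):
        return samples[-1]
    before, after = samples[i - 1], samples[i]
    # Prefer the earlier sample on a tie.
    return before if t - before.t <= after.t - t else after


def synchronize(streams, frame_times, max_skew=0.05):
    """Align several sensor streams to common frame times (hypothetical helper).

    streams: dict mapping a stream name to a list of Samples sorted by time.
    Returns one dict per frame. A stream's entry is None when its nearest
    sample is more than max_skew seconds away from the frame time.
    """
    # Precompute each stream's timestamp list once so lookups are O(log n).
    times = {name: [s.t for s in samples] for name, samples in streams.items()}
    frames = []
    for t in frame_times:
        frame = {"t": t}
        for name, samples in streams.items():
            s = _nearest(times[name], samples, t)
            frame[name] = s.value if s is not None and abs(s.t - t) <= max_skew else None
        frames.append(frame)
    return frames


streams = {
    "pupil_mm": [Sample(0.00, 3.1), Sample(0.10, 3.4)],
    "blink": [Sample(0.02, 0.0), Sample(0.30, 1.0)],
}
frames = synchronize(streams, [0.0, 0.1])
```

In this example, the blink stream has no sample within 50 ms of t = 0.1 s, so that frame records `None` for it rather than a stale value. Real-time registration of the aligned signals onto the partner's face in 3D space is a separate step that this sketch does not cover.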

The Mixed Reality Social Prosthesis was initially developed by APL researchers for intelligence interviewers and police officers. They could use the system to detect deception and to improve their skills in de-escalation and conflict resolution. “It can be difficult in the heat of the moment for people to make accurate assessments of others’ emotional state,” said Greenberg. “The Mixed Reality Social Prosthesis could help train officers to recognize and overcome the impact of stress on perception of emotion.”

The system could also be applied beyond intelligence and law enforcement. In health care, it could help restore function in patients who experience social deficits as a result of traumatic brain injury or cognitive decline. It could also help individuals on the autism spectrum.

The Mixed Reality Social Prosthesis could also be adapted for clinicians and caregivers who work with these specialized populations. It would help them interpret social signals and emotional displays on an individualized basis. “Individuals with social deficits may express emotions in unfamiliar ways,” added Greenberg. “This system could help attune practitioners to patients’ particular patterns of expression.”

Source: JHU APL