From IC Insider Red Hat

In the last discussion of post-quantum cryptography (PQC), we stressed the importance of starting the transition process early to head off the threat posed to our modern IT infrastructure and address the likely challenges that will present themselves. In this discussion, we will present some practical advice for starting that process, grounded in the existing policy directives provided in National Security Memorandum 10 (NSM 10) and Office of Management and Budget Memorandum M-23-02 (M-23-02). In particular, we will outline the initial inventory and transition prioritization steps and show how general-purpose enterprise IT automation tools can help tackle this challenge.

These directives lay out the basic process for PQC modernization, particularly in preparing for a long and complex enterprise transition. This is apparent in three places: the stated emphasis on cryptographic agility in the event algorithms are modified or replaced, the initial reporting activities that then continue on an ongoing basis, and a timeline that stretches data collection and reporting over more than a decade, which indicates an expectation that modernization efforts will be ongoing throughout this period. These efforts are focused on identifying High-Value Assets (HVAs) and High Impact Systems, and creating a prioritized inventory for modernization.

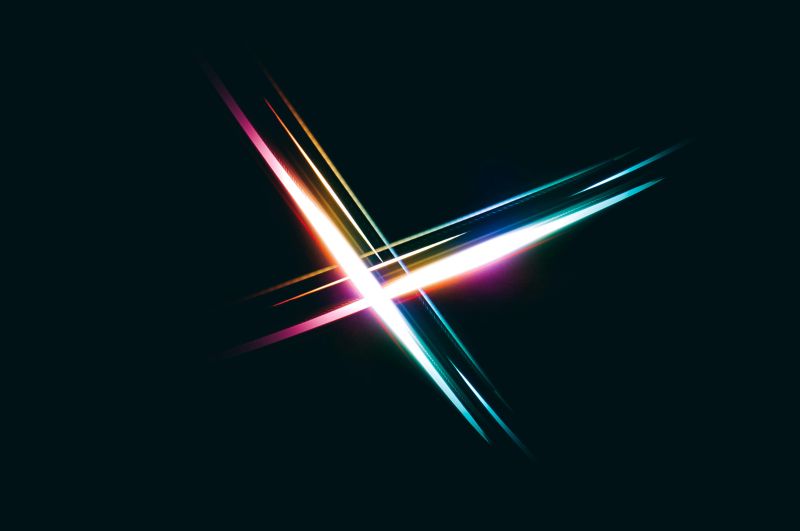

Figure 1: A PQC modernization process focusing on using system automation tools for identifying and managing HVAs and High Impact Systems

To achieve these goals, a straightforward process emerges. First, collect relevant data from all systems and cryptographic functions through scanning, probing, inspection, and similar activities. Most organizations lack centralized records for their cryptographic systems, which means we need to check a potentially wide variety of sources. Targets for these activities can and should be extracted from all possible sources, for example:

- Enterprise resource planning (ERP) and management (ERM) tools

- code repositories, CMDB tools, RMF/ATO databases

- Asset managers, shipping/receiving tools, order and vendor management tools

- credentialing and key management, license/key servers

- firewalls/proxies, switches, routers, network management tools (e.g., network mappers, SASE, VPNs), direct network scans (nmap, Wireshark, ssh)

- SOC / SIEM records, NOC records, power or ILO management

- system/product/vendor documentation, manually defined inventory

Further complicating this step, the methods and techniques used to interact with each possible collection point are not uniform. Viable routes include examining direct configuration (e.g., the primary operational configuration of an asset), indirect configuration (e.g., connections to other layered components or external dependencies), captured logs and audit records, traffic and protocol captures, API specifications, and serial or other custom interfaces. Exploiting the full range of possible data sources is necessary to build a useful inventory because many systems will not directly report such information as which cryptographic systems are explicitly configured, where mixed cryptographic modes are used for a single asset, parameter details (e.g., key sizes, block sizes), the order of algorithm preference, actual system or protocol negotiations, compatibility or upgrade paths, or which supported cryptosystems and protocols might be available for alternative use.
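As a small illustration of examining direct configuration, the sketch below uses only Python's standard `ssl` module to list the TLS cipher suites enabled in the local host's default client context, in preference order. The function name and the output field names are our own assumptions for illustration. The exact fields reported depend on the underlying OpenSSL build, and a real inventory would need comparable collectors for many other asset types.

```python
import ssl


def local_tls_cipher_inventory():
    """List cipher suites enabled in this host's default TLS client context.

    Illustrative helper (hypothetical name). SSLContext.get_ciphers()
    returns the enabled ciphers in priority order, so the list position
    doubles as the order of algorithm preference.
    """
    context = ssl.create_default_context()
    inventory = []
    for rank, cipher in enumerate(context.get_ciphers(), start=1):
        inventory.append({
            "preference_rank": rank,
            "name": cipher.get("name"),
            "protocol": cipher.get("protocol"),
            # These keys may be absent on older OpenSSL builds, hence .get().
            "key_exchange": cipher.get("kea"),
            "authentication": cipher.get("auth"),
            "strength_bits": cipher.get("strength_bits"),
        })
    return inventory


if __name__ == "__main__":
    for entry in local_tls_cipher_inventory():
        print(entry)
```

This only captures what one library on one host is willing to negotiate. What is actually negotiated with a given peer still has to come from traffic captures, logs, or live probes.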

The second major process step is to analyze the collected data to determine the value and impact of each asset or system’s use of cryptography. Due to the data’s scope and breadth, data deduplication and regularization are critical during this phase. Each enterprise must weigh several factors when determining impact, including cryptographic exposure under both regular and irregular operations, upstream or downstream operations, the diversity of interactions by type and mechanism, the total number of interactions, the system’s longevity, and the system data’s longevity. These factors must also be weighed across several dimensions of potential impact: technical, compliance, and business. The data collection and analysis cycle, per the M-23-02 guidance, will need to be repeated and reported regularly.
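To make the deduplication, regularization, and weighting described above more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the record fields (`host`, `algorithm`, `source`), the alias table, the factor names, and especially the weights, which each enterprise would need to set for itself.

```python
# Hypothetical alias table mapping source-specific spellings to one name.
ALGORITHM_ALIASES = {
    "rsa2048": "RSA-2048",
    "rsa-2048": "RSA-2048",
    "secp256r1": "P-256",
    "prime256v1": "P-256",
}

# Hypothetical weights for impact factors, each rated from 0.0 to 1.0.
IMPACT_WEIGHTS = {
    "exposure": 0.30,
    "coupling": 0.25,
    "interaction_volume": 0.15,
    "system_longevity": 0.15,
    "data_longevity": 0.15,
}


def normalize_algorithm(raw):
    """Regularize an algorithm label reported by any source."""
    key = raw.strip().lower().replace("_", "-").replace(" ", "-")
    return ALGORITHM_ALIASES.get(key, key.upper())


def deduplicate(records):
    """Merge records describing the same host and algorithm.

    The set of sources is kept so analysts can see where each finding
    was observed.
    """
    merged = {}
    for rec in records:
        key = (rec["host"].strip().lower(), normalize_algorithm(rec["algorithm"]))
        entry = merged.setdefault(
            key, {"host": key[0], "algorithm": key[1], "sources": set()}
        )
        entry["sources"].add(rec["source"])
    return list(merged.values())


def impact_score(factors):
    """Weighted sum of factor ratings; missing factors count as 0.0."""
    total = sum(w * factors.get(name, 0.0) for name, w in IMPACT_WEIGHTS.items())
    return round(total, 3)


if __name__ == "__main__":
    raw = [
        {"host": "Web01", "algorithm": "rsa_2048", "source": "cmdb"},
        {"host": "web01 ", "algorithm": "RSA-2048", "source": "nmap"},
    ]
    print(deduplicate(raw))
    print(impact_score({"exposure": 1.0, "coupling": 0.5}))
```

A single weighted sum is the simplest possible model. In practice the technical, compliance, and business dimensions may each deserve their own score.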

The analysis will then feed into each system’s choice of remediation strategy and timeline. The choice of strategy will be informed by the data collected, which will narrow available options for each system by factors such as technical compatibility or the likelihood of disruption of other high value or high impact systems. For example:

- systems with high coupling to other systems will usually be high impact

- low value and low impact systems may be targets for novel remediations or may be deferred

- higher impact systems may deploy flexible classical and PQC hybrid approaches
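The rules of thumb above could be encoded as a simple first-pass triage function, sketched here in Python. The thresholds, the 0.0 to 1.0 rating scale, the strategy labels, and the catch-all "case-by-case review" outcome are all hypothetical. The sketch only illustrates how analysis results might narrow the options, not how a final decision should be made.

```python
def suggest_strategy(value, impact, coupling, high=0.7, low=0.3):
    """Suggest a first-pass remediation strategy for one system.

    All inputs are ratings from 0.0 to 1.0. Thresholds and labels are
    illustrative assumptions, not policy.
    """
    # Systems tightly coupled to other systems are treated as high impact.
    if coupling >= high:
        impact = max(impact, high)

    if impact >= high:
        return "hybrid classical + PQC"
    if value <= low and impact <= low:
        return "defer or trial novel remediation"
    # Anything in between needs human judgment (hypothetical catch-all).
    return "case-by-case review"


if __name__ == "__main__":
    print(suggest_strategy(value=0.2, impact=0.1, coupling=0.9))
    print(suggest_strategy(value=0.1, impact=0.1, coupling=0.1))
    print(suggest_strategy(value=0.5, impact=0.5, coupling=0.2))
```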

Within the process outlined above, there are key areas where enterprise automation tools can be applied. For this discussion, we will designate Red Hat Ansible Automation Platform as our automation tool of choice and explain here why it is the right choice for:

- The data collection process. Ansible Automation Platform provides native integrations with a wide array of assets (operating systems, firewalls and load balancers, network devices, storage devices, CMDB tools, and others) and can be extended using custom plugins and modules where a native integration doesn’t exist. Using these integrations, asset-specific roles can be created for collecting data from a broad range of potential sources for cryptographic impact analysis without resorting to multiple vendor-specific tools. These roles can encapsulate the details of each asset, which are likely outside the specialties of inventory and migration staff. A library of roles can be built over time, starting with the most likely and direct sources of cryptographic posture information (for example, the target system’s explicit cryptographic configuration data or CMDBs) and later maturing to cover other ancillary sources. The creation of these roles can be accelerated through the collaborative environment facilitated by Ansible Galaxy, where vendors and practitioners can share their open-source tools for inventorying cryptographic usage.

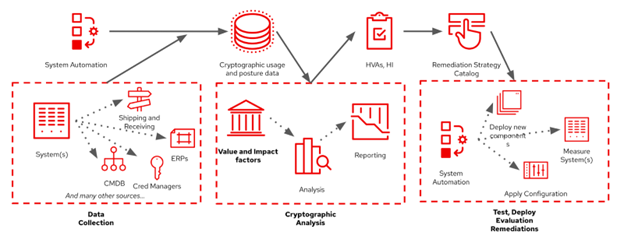

The use of system automation tools for data collection is also ideally suited for security-sensitive enterprises such as the intelligence community, DoD, or critical infrastructure. A scan and probe process is inherently risky: it requires access to a vast number of systems, and the cryptographic configuration and usage information it gathers about these systems can expose weaknesses and attack vectors. Automation tools like Ansible Automation Platform can dramatically reduce this concern, both by decoupling human-oriented workflows from the technical integrations and by ensuring all interactions are logged and auditable. This can allow staff to direct the data collection process without being granted direct access to all these data sources or to the resulting data capture.

Figure 2: Using an automation platform can allow the data collection process to be managed and directed while preventing operators from accessing potentially sensitive cryptographic posture data.

- Continuous assessment. The playbooks and workflows that Ansible Automation Platform can encode provide a robust configuration management baseline for the inventory process. This is essential to separate observed regressions or improvements that are due to the data collection itself from those due to other causes, such as evolving enterprise infrastructure or tests and experimentation with post-quantum remediations. It is also essential to ensure the assessment process can be repeated to meet annual reporting requirements without overly burdening IT staff. Continuous assessment of an enterprise via automation tools will be necessary to keep changes in the cryptographic posture and capabilities of asset types, as captured in specific forms such as a Cryptographic Bill of Materials (CBOM), in sync with the usage of these capabilities as captured in other sources such as a CMDB.

- Test, Deployment, and Evaluation of potential remediations. As your enterprise analyzes its cryptographic posture and identifies potential remediation strategies, Ansible Automation Platform can be used in its more traditional role: applying and managing the technical changes needed to carry out these strategies, such as deploying new cryptographic libraries and functions, reconfiguring systems or networks to allow these, or adding or removing architectural components for wrapping or proxying affected cryptographic interactions. The complexities of this process, especially where hybrid strategies are used, will require a robust, repeatable, and declarative tool to execute. Moreover, in a new crypto-agile IT environment, this function will become a standard rather than exceptional operational capability. Use of an automation framework like Ansible Automation Platform early in the test and experimentation phase will lay the necessary groundwork for this future operational state.
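For the continuous assessment step described above, one basic building block is comparing inventory snapshots from successive collection runs. The Python sketch below assumes a deliberately simple snapshot shape, a mapping from asset identifier to the set of algorithm names observed on that asset. This shape is our own illustration and not a CBOM format, and the asset names are made up.

```python
def diff_snapshots(previous, current):
    """Report per-asset algorithm changes between two inventory snapshots.

    Each snapshot maps an asset id to an iterable of algorithm names.
    Assets whose algorithms did not change are omitted from the result.
    """
    changes = {}
    for asset in sorted(set(previous) | set(current)):
        before = set(previous.get(asset, ()))
        after = set(current.get(asset, ()))
        if before != after:
            changes[asset] = {
                "added": sorted(after - before),
                "removed": sorted(before - after),
            }
    return changes


if __name__ == "__main__":
    # Hypothetical snapshots from two collection runs.
    last_run = {"vpn-gw": {"RSA-2048"}, "web01": {"P-256"}}
    this_run = {"vpn-gw": {"RSA-2048", "ML-KEM-768"}, "web01": {"P-256"}}
    print(diff_snapshots(last_run, this_run))
```

Because the collection playbooks themselves are held constant between runs, a difference reported here can be attributed to a change in the environment rather than a change in how the data was gathered.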

As we see, an automated approach to post-quantum cryptography migration will be essential for success. Meeting broad enterprise goals for assessing and prioritizing assets and systems and tackling the modernization and transition of these individual systems is enabled with platforms like Red Hat Ansible Automation Platform. For more information on this and other areas Red Hat can offer assistance, visit red.ht/icn.

About IC Insiders

IC Insiders is a special sponsored feature that provides deep-dive analysis, interviews with IC leaders, perspective from industry experts, and more.