Approaching Datacenter Modernization: Enabling Secure, Compliant, Platform as a Product

From IC Insider HashiCorp

By: Mike Wright, Timothy J. Olson

Modern datacenters are essential for mission success and foundational to hybrid cloud adoption. To thrive in the cloud era, organizations are evolving from ITIL-based, operator-driven workflows to dynamic, agile, hybrid platforms. Key to this effort is enterprise datacenter modernization that focuses on enabling automation, integrated workflows, and shared self-service processes. These new dynamic, multi-platform workflows are being adopted to accelerate application delivery, enhance overall security, and increase mission effectiveness. This article will focus on essential and practical approaches to datacenter modernization. For additional information on successful adoption of hybrid cloud, see A Leadership Guide – Multi-Cloud Success for the Intelligence Community.

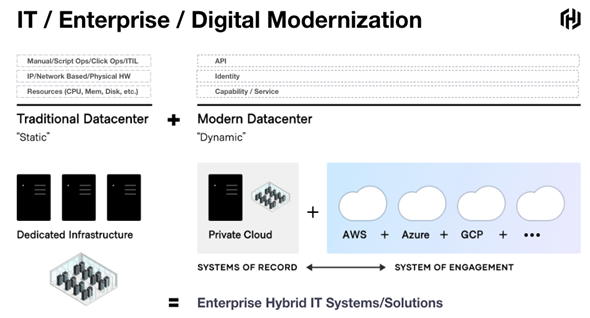

Effective digital transformation and modernization practices mean adopting industrialized workflows—proven, established, systematic processes that produce consistent, repeatable, predictable, reliable outcomes at scale—that can deliver scalable business and mission objectives quickly on demand. An increasingly popular approach to modernizing IT operations for hybrid cloud environments is the Cloud Operating Model, which enables organizations to incorporate a foundational technology stack starting from wherever they are today along the journey to cloud.

Modern datacenters enable hybrid cloud, where success often depends on consistent (referred to as “golden”) workflows that can be reused frequently, reliably, and at scale across multiple environments. This requires:

- Standardization of provisioning workflows (codified, based on instruction sets, enabling automation)

- Identity-based security for zero trust architectures and network connections

- Privileges and rights that allow applications to be deployed and run in a compliant, secure manner

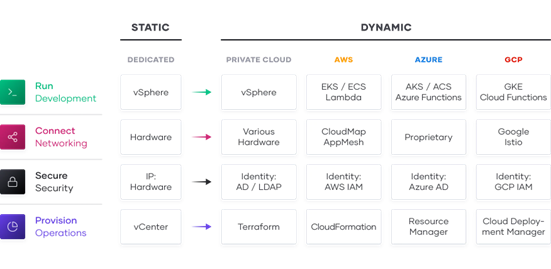

The essential implication, and challenge, of moving to hybrid cloud is the shift from “static” to “dynamic” infrastructure, in which resources are provisioned and run on demand using a new set of IT primitives and control points that are core to datacenter modernization.

While most enterprises began with one cloud provider, hybrid/multi-cloud platforms that offer a wide range of on-demand consumable services and resources are being rapidly adopted as the preferred way to meet varying and increasing mission demands. This approach presents an opportunity for increased speed, efficiency, and scale across “systems of engagement”—applications built to dynamically engage mission users. For most enterprises these systems of engagement must connect to existing “systems of record”—the core mission/business databases and internal applications, which typically reside on enterprise datacenter infrastructure. As a result, enterprises pursue a hybrid solution—a mix of multiple environments under a centralized consumption and management platform.

The challenge for most enterprises is how to deliver these applications rapidly, securely, and with consistency while also ensuring the least possible disruption and friction across the various IT stakeholders and development/mission teams. Compounding this challenge, the underlying primitives have expanded and changed, resulting in disparate, complex, and competing operational models. Datacenter modernization efforts therefore become increasingly important.

Central to today’s datacenter modernization are prolific and widely deployed virtualized workloads. These virtualized workloads contribute to the challenge because of increased demand for new mission/application capabilities, rapid delivery methods, and increased concern around cost, risk, and speed.

Modernizing Virtual Workloads

Datacenter technologies and vendor ecosystems are constantly evolving, presenting ongoing challenges for federal IT managers and decision-makers. These changes encompass a wide spectrum of concerns, including shifting feature requirements, evolving workload and mission needs, heightened security threats, skillset gaps, usage pattern fluctuations, and procurement complexities, all of which can significantly impact licensing and operational costs. Replacing a widely deployed virtual infrastructure hosting thousands—sometimes tens of thousands—of virtual machine (VM) workloads adds further complexity to modernization efforts.

To navigate these challenges effectively, government IT organizations must take a strategic approach when considering transformative shifts in core technologies. Many agencies are exploring comprehensive datacenter modernization initiatives that integrate people, processes, and technology. With an agile approach, these efforts can start small, gather insights from initial results, and adapt strategies accordingly to achieve impactful, sustainable modernization.

Goals commonly considered as part of a datacenter modernization effort include:

- Modernize processes supporting legacy VM workloads

- Enhance cybersecurity posture and visibility

- Increase developer/platform user speed with policy and governance protections

- Enhance protection against future IT concentration risks and vendor lock-in

- Drive down costs associated with core infrastructure (compute, network, and storage)

- Drive down or eliminate process time from remaining ticket-based, ClickOps processes

At HashiCorp we organize these problem domains as Cost, Risk, and Speed.

Though there is increasing use of federally sanctioned public clouds, numerous federal entities still require and utilize government clouds (e.g., C2E), private clouds, and on-prem virtual infrastructure (enterprise datacenters). In many cases these virtual infrastructure deployments are critical to mission success and can be remotely deployed in offices or embassies, military bases, ships, aircraft, and to the mobile tactical edge. Even if existing virtual machine (VM) application workloads could be securely migrated to the cloud using federally approved solutions, practical constraints related to edge computing often undermine their feasibility. Many mission-critical deployments face challenges such as unreliable or nonexistent remote network connectivity, particularly in environments where operational sensitivity or security considerations prevent consistent access. These constraints highlight the need for alternative strategies that address the unique demands of federal edge environments.

Migrating off current virtual infrastructure, such as VMware-centric implementations, is often infeasible, too complex, or fraught with challenges. VMware provides a very mature and reliable hypervisor and virtual infrastructure platform, and teams managing VMware environments are adept at implementing datacenter and edge solutions on top of some of the world’s most widely deployed virtualization technologies. Legacy commercial enterprise software and custom-built applications and data systems have often been designed so that achieving high availability, limiting downtime, and recovering from hardware failures rely heavily on specific features of the underlying virtualization tools. In the case of vSphere, those features typically include vSphere HA, DRS, vMotion, and backup/restore.

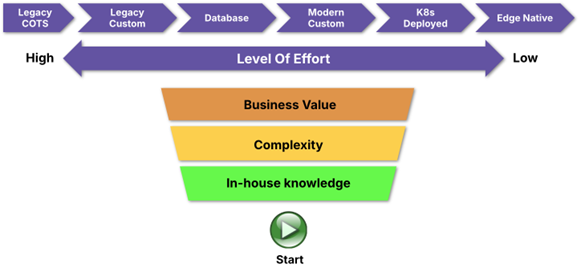

With these datacenter challenges and many more in mind, what are ways to approach the question: “Where to start with datacenter modernization?”

The bottom line: This effort will take time and require skills development. The most common place to start is with the applications (and corresponding infrastructure) or, more formally, with a workload portfolio analysis. Typically, a VM estate spans workloads ranging from legacy applications and data systems to modern containerized applications running in some distribution of Kubernetes deployed as clusters of VMs.

Application/workload portfolio analysis is not new, and some organizations may be able to rely on previous work. However, a re-platforming/modernization effort looks for very specific technical characteristics, similar to those used in the application analysis done during the cloud-native wave of container platform adoption over the past decade.

Examples of workload business and technical criteria to revisit include:

- Criticality or mission/business value (high, medium, low)

- Investment disposition/costs (maintain, improve, retire, replace)

- Custom built or commercial-off-the-shelf (COTS)

- VM or container or both

- Persistence/stateful framework (RDBMS, filesystem, etc.)

- Infrastructure/OS patching/upgrading process

- Clustered or shared-nothing architecture, horizontally scalable

Consider two hypothetical VM-based applications deployed to vSphere:

| Application A | Application B |
| --- | --- |
| No future investment (maintain) | No future investment (maintain) |
| Vendor COTS software package | Custom Built (.NET Framework) |
| Stateful – Relies on VM filesystem for stateful HA | Stateless – Relies on backend RDBMS for state |
| Windows 2019 | Windows 2022 |
| Automated OS patching via Windows Domain Controller, app upgrades done manually. No VM Golden Image generation. | Patched using VM replace, Golden VM images generation |
| App is not clusterable, not horizontally scalable | App is horizontally scalable, load-balanced user traffic |

VM workloads similar to Application A typically rely more heavily on vSphere-specific features to maintain high availability. Workloads similar to Application B do not rely heavily on vSphere DRS, vMotion, HA, or vSAN to maintain availability.

The point of this exercise is to highlight the fact that some or many application workloads are candidates to move over to an alternative hypervisor-based platform relatively easily with automation, as may be the case with the Application B example.
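The comparison above can be sketched as a simple scoring exercise. The following is a minimal illustration in Python, not a HashiCorp tool or a formal methodology: the `Workload` fields, the `migration_risk` function, and the equal weighting of each trait are all assumptions chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """A VM workload described by a few of the portfolio criteria (hypothetical model)."""
    name: str
    custom_built: bool            # False means vendor COTS
    stateless: bool               # state lives outside the VM, e.g. in a backend RDBMS
    golden_image_patching: bool   # patched by replacing VMs built from golden images
    horizontally_scalable: bool   # can run as several load-balanced instances


def migration_risk(w: Workload) -> int:
    """Count the traits that make a move to another hypervisor harder.

    0 means few obstacles; 4 means every trait works against the move.
    Equal weighting is an assumption made for illustration only.
    """
    return sum([
        not w.custom_built,
        not w.stateless,
        not w.golden_image_patching,
        not w.horizontally_scalable,
    ])


def migration_order(workloads: list[Workload]) -> list[Workload]:
    """Sort workloads so the lowest-risk candidates come first."""
    return sorted(workloads, key=migration_risk)


app_a = Workload("Application A", custom_built=False, stateless=False,
                 golden_image_patching=False, horizontally_scalable=False)
app_b = Workload("Application B", custom_built=True, stateless=True,
                 golden_image_patching=True, horizontally_scalable=True)

for w in migration_order([app_a, app_b]):
    print(f"{w.name}: risk score {migration_risk(w)}")
```

In a real portfolio the criteria would likely carry different weights and include mission criticality, but even a coarse score like this makes it easy to surface the Application B style workloads first.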

Enabling a pragmatic approach:

- Start with low-risk workloads first

- Choose custom-built, in-house workloads over COTS if possible

- Automate the entire process, from OVA/OVF image conversion to scanning/patching to deployment into a test environment on the newly selected target infrastructure

- Build a new repository of “Golden Images”

- Choose stateless over stateful where possible

- Develop skills

Improve As You Move

At HashiCorp, we are focused on helping large IT organizations with improvements across cost, risk, and speed. While processes continue to evolve and modernize, there is still much opportunity for improvement and modernization within private datacenters and out to the edge. Making large investments in technology change opens the door to addressing additional areas for improvement. Leadership will want to leverage investment and budget dollars to improve on all three dimensions of cost, risk, and speed. If we only accomplish software cost reduction without lowering risk or increasing speed, we may be missing out on optimizing the entire effort.

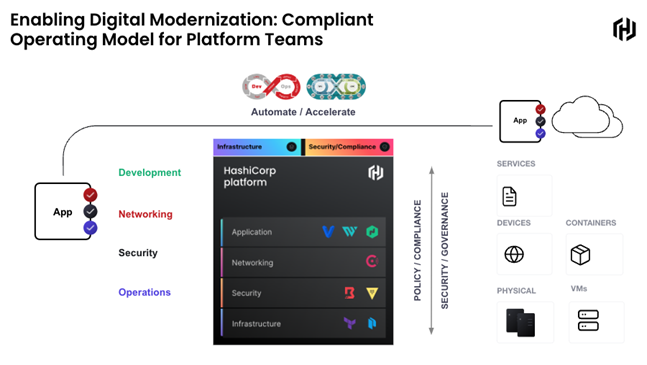

This is where we see the opportunity to apply a Cloud Operating Model to a private cloud estate and adopt a Platform as a Product approach, using Platform Team best practices to satisfy user needs.

The Cloud Operating Model emphasizes transitioning from static datacenter resources to supporting more dynamic usage patterns. As seen with public cloud, private cloud operators can deliver on-demand resources when infrastructure is consistently provisioned, secured, connected, and run. For most enterprises, delivering on-demand resources will require a transition in each of those areas.

- Provision. The infrastructure layer transitions from running dedicated servers at limited scale to a dynamic environment where organizations can easily adjust to increased demand by spinning up thousands of servers and scaling them down when not in use.

- Secure. The security layer transitions from a fundamentally “high-trust” world enforced by a strong perimeter and firewall to a “low-trust” or “zero-trust” environment with no clear or static perimeter. As a result, the foundational assumption for security shifts from being primarily network-centric to identity-based. This shift can be highly disruptive to traditional security models.

- Connect. The networking layer transitions from being heavily dependent on the physical location and IP address of services and applications to using a dynamic registry of services for discovery, segmentation, and composition. An enterprise IT team no longer has the same degree of control over the network or the physical locations of compute resources, and therefore must consider service-based connectivity.

- Run. The runtime layer shifts from deploying artifacts on a static application server to deploying applications with a scheduler atop a pool of infrastructure which is provisioned on-demand. New applications then become collections of services that are dynamically provisioned and packaged in multiple ways: from virtual machines to containers.
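To make the idea of a dynamic registry of services more concrete, here is a minimal in-memory sketch in Python. It is an illustration of the general pattern only, not the API of any particular product; the `ServiceRegistry` class, the service name, and the addresses are hypothetical.

```python
import random
from collections import defaultdict


class ServiceRegistry:
    """In-memory registry mapping a service name to its live instances (illustrative)."""

    def __init__(self) -> None:
        self._instances: dict[str, set[str]] = defaultdict(set)

    def register(self, service: str, address: str) -> None:
        """Called when an instance starts, wherever it happened to be scheduled."""
        self._instances[service].add(address)

    def deregister(self, service: str, address: str) -> None:
        """Called when an instance stops or fails a health check."""
        self._instances[service].discard(address)

    def resolve(self, service: str) -> str:
        """Return the address of one registered instance, chosen at random."""
        candidates = sorted(self._instances.get(service, ()))
        if not candidates:
            raise LookupError(f"no instances registered for {service!r}")
        return random.choice(candidates)


registry = ServiceRegistry()
registry.register("records-api", "10.0.1.15:8443")
registry.register("records-api", "10.0.7.22:8443")
registry.deregister("records-api", "10.0.1.15:8443")

# Callers ask for the service by name rather than hard-coding an IP address.
print(registry.resolve("records-api"))
```

The design point is that consumers depend on a service name, so instances can move, scale, or be replaced without reconfiguring every caller.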

The typical journey for unlocking the cloud operating model involves three major milestones:

- Establish hybrid cloud essentials. Key to effective adoption of the hybrid cloud is the immediate requirement for provisioning infrastructure. This is accomplished by adopting infrastructure as code (IaC) and ensuring it is secure with a platform-agnostic secrets management solution. These are the bare necessities for building a scalable, platform-agnostic, and truly dynamic cloud architecture that is future-proof.

- Standardize on a set of shared services. As hybrid cloud consumption increases, establishing and standardizing on a set of shared services becomes necessary to take full advantage of what the cloud has to offer and to maximize value, effectiveness, and efficiency. This also introduces challenges around governance and compliance.

- Innovate using a common logical architecture. Effective adoption of the cloud depends on the use of differing and applicable services and resources across multiple platforms and applications, making the need to create a common, platform-agnostic, logical architecture critical to success. This requires a control plane that connects with the extended ecosystem of cloud solutions and inherently provides advanced security and orchestration across services and multiple clouds.
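The core idea behind infrastructure as code, declaring a desired state and letting tooling work out the changes, can be sketched in a few lines of Python. This is a conceptual illustration only and does not reproduce how any specific IaC tool computes its plan; the `plan` function, resource names, and attributes are assumptions made for the example.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare declared resources with what exists and list the changes needed."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(
            name for name in desired.keys() & actual.keys()
            if desired[name] != actual[name]
        ),
        "delete": sorted(actual.keys() - desired.keys()),
    }


# Desired state, as it might be versioned in Git (values are illustrative).
desired = {
    "web-01": {"cpus": 4, "memory_gb": 16, "image": "golden-2024-06"},
    "web-02": {"cpus": 4, "memory_gb": 16, "image": "golden-2024-06"},
}

# State reported by the platform (also illustrative).
actual = {
    "web-01": {"cpus": 2, "memory_gb": 16, "image": "golden-2024-06"},
    "db-old": {"cpus": 8, "memory_gb": 64, "image": "legacy"},
}

print(plan(desired, actual))
# {'create': ['web-02'], 'update': ['web-01'], 'delete': ['db-old']}
```

Because the desired state is plain data under version control, every change can be reviewed, audited, and repeated across environments before anything is provisioned.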

With this approach, common datacenter modernization activities become much easier and more efficient over time. Everything from physical capacity planning, provisioning, and audit to threat detection and response, eliminating resource waste, and enforcing policy can see significant improvements from these elements of a cloud operating model.

Applying Platform as a Product with datacenter modernization initiatives involves a re-inventory of user needs, without giving up any technology imperatives regarding policy, governance, security, and regulations. It’s a user-centric, dynamic approach using principles of product management where a new build can prioritize the capabilities that matter toward improvement (as compared to the previous datacenter generation). Adopting a Platform approach is akin to storing a rackmount server in Git as a codified infrastructure resource (using Infrastructure as Code and applying IaC best practices). It’s an opportunity for private and hybrid cloud platform operators to start small, apply versioned IaC, and iterate over a prioritized list of user stories just as developers do when building business solutions for their users.

HashiCorp Enterprise Solutions enables mission outcomes to be delivered with:

- Platform Standardization

  - Automated application and IaC

  - Self-service, no vendor lock-in (platform-agnostic)

- Standardized Workflows (IaC-based)

  - Efficient, repeatable, predictable, standardized deployments

  - Increased accuracy with pre-approved infrastructure

  - Accelerated deployments

  - Increased developer velocity and app enhancement

- Automated and Consistent Policy Enforcement

  - Ensures and enforces compliant deployments (lower risk)

  - Minimal remediation required, rapid compliant deployments

  - Greatly reduced risk and audit logging

- Automated Integration between Pipeline Components

  - Rapid increase in mission application capabilities

  - Adoption of DevSecOps and CI/CD methodologies
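Automated policy enforcement and pre-approved infrastructure, as listed above, amount to checking each proposed resource against codified rules before it is deployed. The Python sketch below illustrates that pattern in general terms; it is not the syntax or API of any policy product, and the image names, tag requirement, and function names are hypothetical.

```python
from typing import Callable, Optional

Resource = dict[str, object]
Policy = Callable[[Resource], Optional[str]]  # returns a violation message or None

APPROVED_IMAGES = {"golden-rhel9", "golden-win2022"}  # hypothetical image names


def uses_approved_image(resource: Resource) -> Optional[str]:
    """Require that the VM is built from a pre-approved golden image."""
    if resource.get("image") not in APPROVED_IMAGES:
        return f"image {resource.get('image')!r} is not pre-approved"
    return None


def has_owner_tag(resource: Resource) -> Optional[str]:
    """Require an 'owner' tag so every resource is attributable."""
    tags = resource.get("tags") or {}
    if "owner" not in tags:
        return "missing required 'owner' tag"
    return None


def evaluate(resource: Resource, policies: list[Policy]) -> list[str]:
    """Run every policy and collect violations; an empty list means compliant."""
    return [msg for policy in policies if (msg := policy(resource)) is not None]


proposed = {"name": "app-b-web", "image": "custom-build-7", "tags": {}}
for violation in evaluate(proposed, [uses_approved_image, has_owner_tag]):
    print("DENY:", violation)
```

Running the checks in the delivery pipeline, before provisioning, is what keeps remediation minimal: non-compliant requests are rejected with a reason instead of being discovered in a later audit.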

Conclusion

Datacenter modernization necessitates adopting a multi-platform approach that successfully leverages hybrid/multi-cloud. The Cloud Operating Model is an inevitable shift for enterprises aiming to maximize their modernization/digital transformation and mission delivery efforts. The HashiCorp suite of tools seeks to provide solutions for each layer of the platform, enabling enterprises to modernize, make this shift, and solve complex infrastructure challenges to meet mission needs. Adopting a modern enterprise cloud environment through the common Cloud Operating Model means shifting characteristics of enterprise IT:

- People: Shifting to hybrid cloud skills (addressing the skills gap)

  - Enhance skills from internal data center management and single-cloud vendors and apply them consistently in any environment.

- Process: Shifting to self-service IT

- Platform: Position central IT as an enabling, Platform as a Product shared service focused on application delivery velocity

- Tools: Shifting to dynamic environments

  - Use tools that support multi-platform infrastructure and support critical workflows rather than being tied to specific technologies.

  - Provide policy and governance tooling to match the speed of delivery with compliance to manage risk.

Government organizations have an opportunity to establish effective and impactful use of hybrid/multi-cloud environments. HashiCorp and our cloud infrastructure automation tooling are purposefully built to help complex organizations successfully deliver mission outcomes at scale across any platform. Ultimately, these standardized shared services across hybrid/multi-cloud enable an industrialized process for rapid, secure, mission application delivery.

To learn more, please contact HashiCorp Federal at hashicorpfederal@hashicorp.com.

Additional Information:

Whitepaper: Unlocking the Cloud Operating Model

Whitepaper: A Leadership Guide to Multi-Cloud Success for the Intelligence Community

Intelligence Community News Articles by HashiCorp:

- The Multi-Cloud Era is Here

- Getting Started with Zero Trust Security

- Enabling Zero Trust at the Application Layer

- Enabling Zero Trust at the Device/Machine and Human/User Layers

- A Leadership Guide – Multi-Cloud Success for the Intelligence Community

About IC Insiders

IC Insiders is a special sponsored feature that provides deep-dive analysis, interviews with IC leaders, perspective from industry experts, and more.