Infrastructure, Security, and Application Lifecycle Management in the DAF API

From IC Insider HashiCorp

Authors: Dan Fedick, Tim Olson, and Tim Silk

The recently released “DAF API Reference Architecture” by the DAF CTO (Mr. Jay Bonci) is a significant document. It defines the principles for enabling warfighters, mission partners, and allies to share mission-critical intelligence through an API (Application Programming Interface), where authentication and authorization are required for every interaction. An API-centric application model provides a common framework for sharing information between different missions.

In “The Kill Chain,” Christian Brose describes some of the challenges the Department of Defense (DoD) faces when sharing data. The DoD is able to build powerful data collection and enrichment platforms but is not always able to share information between those platforms. Sharing data with partners and allies carries a potential security threat, so APIs can serve as a common protocol that any system can interact with in a “zero-trust” manner. Sharing data between platforms helps to build a comprehensive understanding of adversaries. The more information we can share with our mission partners and allies, the faster we can take action against our adversaries and close the kill chain. While sharing information is crucial, identifying allies and mission partners and determining what information should be shared carry significant risks. Inaccurate sharing could have a high mission impact, underscoring the need for careful planning.

The API Reference Architecture lays out the following principles:

- Principle 1. The API solution will establish modern architectures, “Forcing Lifecycle Management”

- Principle 2. The API solution will be publisher- and developer-friendly

- Principle 3. The API Architecture will be available at all classification levels

- Principle 4. The API solution will have strong API standardization that addresses most use cases

- Principle 5. The API solution will provide an enterprise gateway that assists in the secure scaling of API traffic

- Principle 6. The API solution will make all APIs available through a single SDK framework

- Principle 7. The API Gateway will govern request flow rather than content

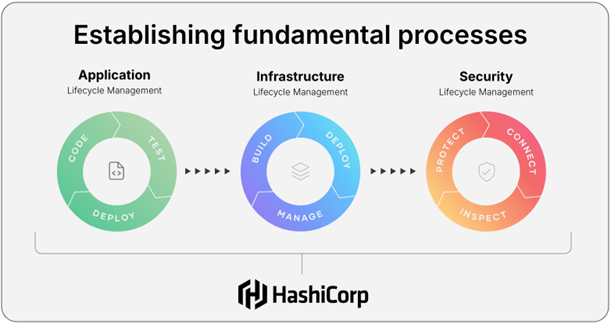

Forcing Lifecycle Management

This first principle is worth highlighting and understanding at a deeper level.

“Lifecycle management” is a business approach that considers the processes of creating, updating, or deleting applications, security, and the underlying infrastructure of those applications. Forcing these systems through automatic Change Control, Continuous Integration, and Continuous Deployment helps to automate complicated and often manual systems. This helps us to reduce risk, increase speed-to-mission, and decrease cost overall. With this understanding in place, let’s now explore these lifecycle attributes and common patterns used in DevOps / DevSecOps environments.

Infrastructure Lifecycle Management (ILM)

Infrastructure components such as servers, storage, networks, and load balancers are all necessary to deploy applications on a highly available orchestration platform. There are many ways to deploy infrastructure. Cloud Service Providers (CSPs) and internal private clouds provide very dynamic environments that can quickly change based on the needs of the mission. Mission-critical platforms must be highly available and able to manage the entire lifecycle of infrastructure, from creation and deployment through upgrades to eventual decommissioning. The goal is to ensure that infrastructure remains reliable, secure, and cost-effective over time.

Create. Infrastructure planning and creation depend highly on the application’s specific use case. Key considerations include end-user security access, user location, and application performance requirements. Horizontal scaling has become significantly easier today due to advanced auto-scaling policies and the seemingly infinite resource availability in the cloud. The use of cloud products can vary widely based on the application, making it essential to understand the infrastructure cost for effective planning and creation.

Modern CSPs offer billing APIs that allow you to calculate the operational cost of running applications accurately. In contrast, private cloud infrastructure requires navigating capital acquisition lifecycles, making planning for growth over 3-5 years crucial.

The transition to the cloud has allowed us to shift to an API-driven workflow for deploying infrastructure. Instead of physical implementations of servers, storage, and networks, CSP APIs have integrated modern development practices into infrastructure management, allowing us to deploy infrastructure to meet the needs of the mission.

API-driven infrastructure deployments spawned the adoption of Infrastructure as Code (IaC), which has become an excellent way to use the DRY (Don’t Repeat Yourself) method of deploying infrastructure. Common patterns quickly reveal themselves and can then be reused for the deployment, policy, and documentation of systems. For example, in the DoD, the most time-consuming step in implementation is the Authority to Operate (ATO) process. Understanding and codifying the policies validated during system approval can significantly expedite this process.

Update. One of the key benefits of using IaC is the presence of a state file. IaC is written using a declarative language. When the code is applied, a state file is created. The state file is a record of the created resources and their attributes as defined (declared) in the IaC blueprint. IaC tools that rely on state files perform a “diff” (difference) operation when a change occurs, comparing the current state of the infrastructure with the desired state defined in the code. This comparison allows the tool to generate the necessary changes to align the infrastructure with the specified configuration. As a result, you can use the same IaC tool to update the infrastructure without having to write new code for each update, ensuring consistency and minimizing human error.
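The “diff” idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular IaC tool is implemented: the `plan_changes` function, the resource names, and the attribute dictionaries are all hypothetical, and real tools also refresh the state against the live infrastructure before planning.

```python
from typing import Any

# A resource is modeled as a plain dictionary of attributes (an assumption for this sketch).
Resource = dict[str, Any]


def plan_changes(state: dict[str, Resource], desired: dict[str, Resource]) -> dict[str, list[str]]:
    """Compare the recorded state with the declared configuration and list the actions needed."""
    plan: dict[str, list[str]] = {"create": [], "update": [], "delete": []}
    for name, attrs in desired.items():
        if name not in state:
            plan["create"].append(name)   # declared but not yet recorded
        elif state[name] != attrs:
            plan["update"].append(name)   # recorded, but attributes differ
    for name in state:
        if name not in desired:
            plan["delete"].append(name)   # recorded but no longer declared
    return plan


# Hypothetical recorded state and desired configuration.
state = {
    "web_server": {"size": "small", "count": 2},
    "old_bucket": {"versioning": False},
}
desired = {
    "web_server": {"size": "small", "count": 4},
    "load_balancer": {"port": 443},
}

plan = plan_changes(state, desired)
print(plan)
```

Because the plan is derived from the two descriptions, the same code path handles the first deployment, later updates, and removals.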

Policy as Code (PaC) is crucial in the update phase of infrastructure management because it ensures that all changes adhere to predefined governance and compliance standards, including DoD ATO requirements. By codifying policies, organizations embed and enforce them in the lifecycle of the infrastructure so that they cannot be bypassed. Rules and best practices can then be enforced during infrastructure updates, preventing configuration drift and unauthorized modifications. HashiCorp Sentinel (part of Terraform Enterprise) is a powerful policy and compliance language (framework) embedded in the Infrastructure Lifecycle. Other complementary tools like Prisma Cloud, Snyk, and Wiz offer common policy, scanning, and analytic capabilities that can be added as third-party integrations in the pipeline for ILM as well.
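As a rough illustration of the idea, a policy can be expressed as a function that inspects each planned resource and reports a violation. The sketch below is plain Python, not Sentinel or any vendor's policy language. The `PlannedResource` type, the two example rules, and the resource attributes are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PlannedResource:
    """A resource that an infrastructure change proposes to create or modify (hypothetical shape)."""
    name: str
    kind: str
    attributes: dict


# A policy returns a violation message, or None when the resource complies.
Policy = Callable[[PlannedResource], Optional[str]]


def require_encryption(resource: PlannedResource) -> Optional[str]:
    if resource.kind == "storage" and not resource.attributes.get("encrypted", False):
        return f"{resource.name}: storage must be encrypted at rest"
    return None


def forbid_public_ingress(resource: PlannedResource) -> Optional[str]:
    if resource.kind == "firewall_rule" and resource.attributes.get("source") == "0.0.0.0/0":
        return f"{resource.name}: ingress from any address is not allowed"
    return None


POLICIES: list[Policy] = [require_encryption, forbid_public_ingress]


def evaluate(plan: list[PlannedResource]) -> list[str]:
    """Run every policy against every planned resource and collect the violations."""
    violations = []
    for resource in plan:
        for policy in POLICIES:
            message = policy(resource)
            if message is not None:
                violations.append(message)
    return violations


# Hypothetical plan: one compliant resource and two that break a rule.
plan = [
    PlannedResource("logs", "storage", {"encrypted": True}),
    PlannedResource("scratch", "storage", {"encrypted": False}),
    PlannedResource("ssh_open", "firewall_rule", {"source": "0.0.0.0/0"}),
]

violations = evaluate(plan)
for line in violations:
    print("DENY:", line)
```

In a pipeline, a non-empty list of violations would stop the change before it is applied, which is what keeps the policy from being bypassed.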

All development within the Infrastructure Lifecycle, including Policy as Code and Infrastructure as Code, should be integrated into automation build workflows, such as Continuous Integration/Continuous Deployment (CI/CD). Any changes made in the code repository, whether through branching or directory-based updates, should automatically trigger the deployment of the updated infrastructure.

Destroy. Another significant advantage of using IaC is the ability to destroy all infrastructure based on the defined state. One of the biggest challenges with infrastructure destruction is the risk of leaving behind orphaned resources. Often, this occurs due to the lack of a comprehensive lifecycle process for deletion.
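One way to picture orphan detection is as a set comparison between what the state records and what actually exists in the environment. The following Python sketch assumes both sides can be reduced to sets of resource identifiers. The `reconcile` function and the identifiers are hypothetical.

```python
def reconcile(tracked_ids: set[str], discovered_ids: set[str]) -> dict[str, list[str]]:
    """Compare resources recorded in state with resources discovered in the environment."""
    return {
        # Present in the environment but unknown to the state: candidates for cleanup.
        "orphaned": sorted(discovered_ids - tracked_ids),
        # Recorded in the state but no longer present: the state itself is stale.
        "missing": sorted(tracked_ids - discovered_ids),
    }


# Hypothetical identifiers from a state file and from an inventory scan.
tracked = {"vm-1", "vm-2", "disk-1"}
discovered = {"vm-1", "disk-1", "disk-9", "vm-7"}

report = reconcile(tracked, discovered)
print(report)
```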

The Gartner Cybersecurity Trends for 2024 report states that shadow IT resources account for 30-40% of IT spending. In large enterprises, shadow IT also poses a significant security risk. Any residual resources, including servers, stale disks, and applications, can also introduce security vulnerabilities. Forcing ILM by codifying processes and policies helps build the dependable platform that applications rely on to share data through API-driven automation.

Security Lifecycle Management (SLM)

Designing a comprehensive security lifecycle is essential for safeguarding an organization’s platform. Security measures evolve continuously throughout the person entity (PE) and non-person entity (NPE) security lifecycle. Onboarding users and applications, rotating credentials, mapping policies, and even deleting accounts must be planned and accounted for. The federal government lays out security guidance such as NIST 800-53 Rev 4/5 for Information Systems and Organizations and NIST 800-207 for Zero Trust Architecture to help protect, inspect, and connect.

Create. The root of all security is the ability to establish identity. There are many identity providers and capabilities on the market today, including Keycloak, Okta, PingFederate, Active Directory (AD), AWS OIDC, Azure AD, and LDAP. Due to the nature of the cloud and the many ways to authenticate identity, having a central and secure identity broker is critical. Tools like HashiCorp Vault allow organizations to consolidate their many identity providers behind a single broker, giving platform-agnostic access to different systems, data, and services.

HashiCorp Vault maps an identity to a policy that gives access to credentials (secrets and sensitive data such as usernames and passwords, keys, and certificates). Credentials are stored or generated in Vault via “Secrets Engines,” allowing for just-in-time, dynamic credentials for users and machines. Those credentials are stored within a cryptographically sealed FIPS barrier.
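The identity-to-policy-to-credential mapping can be sketched as follows. This is a toy model written for illustration and is not Vault's API: the `ToyBroker` class, the `Lease` type, the policy names, and the secret path are all hypothetical, and the clock is passed in explicitly so the example is deterministic.

```python
import secrets
from dataclasses import dataclass


@dataclass
class Lease:
    """A short-lived credential handed to an authenticated identity."""
    username: str
    password: str
    expires_at: float  # seconds on the caller-supplied clock


class ToyBroker:
    """Illustrative identity -> policy -> credential mapping (not a real product API)."""

    def __init__(self, identity_policies: dict[str, set[str]], policy_paths: dict[str, set[str]]):
        self.identity_policies = identity_policies  # identity -> attached policies
        self.policy_paths = policy_paths            # policy -> secret paths it allows

    def issue(self, identity: str, path: str, now: float, ttl: float = 300.0) -> Lease:
        """Generate a fresh credential if any policy attached to the identity allows the path."""
        policies = self.identity_policies.get(identity, set())
        allowed = any(path in self.policy_paths.get(p, set()) for p in policies)
        if not allowed:
            raise PermissionError(f"{identity} may not read {path}")
        return Lease(
            username=f"v-{identity}-{secrets.token_hex(4)}",
            password=secrets.token_urlsafe(24),
            expires_at=now + ttl,
        )


def is_valid(lease: Lease, now: float) -> bool:
    """A lease is usable only until its time-to-live runs out."""
    return now < lease.expires_at


broker = ToyBroker(
    identity_policies={"payments-api": {"db-readonly"}},
    policy_paths={"db-readonly": {"database/creds/readonly"}},
)
lease = broker.issue("payments-api", "database/creds/readonly", now=0.0)
print(lease.username, is_valid(lease, now=60.0), is_valid(lease, now=600.0))
```

Because each credential is generated on request and expires on its own, a leaked credential has a limited useful life even if nobody notices the leak.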

Ensuring application security requires additional layers of protection. Applications use software libraries that require scanning and code signing, credentials to secure data, and encryption of data both in transit over the network and at rest in storage.

Establishing the application’s identity is the essence of security throughout the lifecycle creation process. Some standard identity providers and methods for applications are Kubernetes Authentication, Azure AD, IAM, and LDAP. Onboarding an application involves understanding the application’s underlying orchestration platform, the identity providers and methods it will use, and the different credential types necessary to run it. Tools like HashiCorp Vault help authenticate using established identity providers and then generate policies that allow access to the required credentials.

Update. Good credential managers can store and rotate credentials. One downside of microservice architectures is the number of certificates, passwords, tokens, and keys necessary to run more applications. Having a credential manager to help centralize your network resource credentials and rotate them when required helps in the update process.
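A rotation check can be as simple as comparing each credential's last rotation time with a maximum allowed age. The sketch below assumes a hypothetical inventory of credential names and timestamps and a 90-day rotation window. Neither comes from a specific product or policy.

```python
from datetime import datetime, timedelta, timezone


def due_for_rotation(last_rotated: dict[str, datetime], max_age: timedelta, now: datetime) -> list[str]:
    """Return the names of credentials whose age has reached the maximum allowed age."""
    return sorted(name for name, rotated_at in last_rotated.items() if now - rotated_at >= max_age)


# Hypothetical inventory of credentials and when each was last rotated.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
last_rotated = {
    "db-password": datetime(2024, 1, 15, tzinfo=timezone.utc),
    "tls-cert": datetime(2024, 5, 20, tzinfo=timezone.utc),
    "api-token": datetime(2024, 3, 1, tzinfo=timezone.utc),
}

stale = due_for_rotation(last_rotated, max_age=timedelta(days=90), now=now)
print(stale)
```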

As an organization’s requirements change due to mission, organizational, or application changes, the requirements for user and application security policies change as well. Storing policy as code (PaC) is a great way to document, approve, and test policy changes before deploying them into production.

Though the term “zero-trust” is often overused, extensive work has gone into defining zero trust and its activities. Tools that can collect data, help define necessary policies, and adapt to changes in an environment help protect users, applications, and devices. Monitoring through active telemetry and logging is critical to informing these tools and updating the policies in real time.

A software inventory of what is running on systems and devices informs administrators’ patch management. Knowing the latest security vulnerabilities and how to remediate environments, applications, and devices is crucial to keeping environments secure.

Destroy. A good inventory of applications, devices, and users is critical to ensuring that shadow credentials are not a threat to a system. The DoD ZT Capabilities document states we should have service, device, and user registries to help centrally manage system resources and know what needs to be disabled or removed during the offboarding process of a user or an application. HashiCorp Consul is a good example of a service registry where user service-to-service permissions can be tracked, updated, and monitored. HashiCorp Vault is a centrally managed credential and identity broker, and can be used to centrally manage those enterprise credentials. It’s essential to have a well-managed registry of users, applications, and devices to ensure shadow resources are deleted to decrease the risk of exposed credentials.

Application Lifecycle Management (ALM)

The entire lifecycle of an application, from creation to updates and eventual decommissioning, requires a range of processes and policies to guarantee rapid deployment and mitigate risks within an API. Selecting a version control system (VCS), setting up monitoring and logging facilities, deciding on the SLOs (Service Level Objectives), and understanding the user base are essential for effectively managing and enforcing ALM for APIs.

The application is the interface that most mission systems will interact with. It is at the core of “The API” when it comes to sharing information between systems. There has been a huge shift from monolithic applications to service-driven and microservices applications. Decomposing large applications in this way has allowed for higher reliability: one small function of an application can be modified without the entire system going down. It also allows us to scale different parts of the API as needed. In the application lifecycle, we have to think about the creation of new applications as well as their updating and deletion.

Create. Most modern languages have HTTP libraries for REST and JSON formatting for standard API calls. Languages like JavaScript, Python, Ruby, and Go are popular choices because of their rich sets of libraries and frameworks, their performance, and the option of a unified language for client and server development.

The cornerstone of building mission applications is storing application code in a VCS for auditing and collaboration control of the codebase. Standard VCS repositories used in disconnected environments are GitLab Enterprise, GitHub, and Gitea. AWS CodeCommit, Azure Repos, and Google Cloud Source are other popular VCS offerings.

Many other considerations include choosing backing services, logging, monitoring, testing, and documentation. One common framework for understanding all of the features required when designing an application is “The 12 Factor App.”

Update. The most common container orchestration platform is Kubernetes. Other, simpler platforms like HashiCorp Nomad are also available. Nomad is often used in environments that have traditionally deployed monolithic applications directly onto VMs and want to migrate quickly to a more dynamic cloud environment. Nomad adds PaC, embedded into the lifecycle of an application. Not only can it run containerized applications, but Nomad can also deploy non-containerized workloads in a highly scalable orchestration system, from the Enterprise to the Edge.

Platforms like Nomad allow you to specify the application’s contract with the system. The contract is an agreement that establishes processing speed, memory, data, network, application location, and policies necessary to run an application. As long as the platform has the required resources, it will ensure the application runs.
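The notion of a contract can be illustrated with a toy placement routine: the application declares what it needs, and the platform looks for a node that can honor it. This first-fit sketch is an assumption made for illustration and is not Nomad's scheduling algorithm. The `Contract` and `Node` types and their fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Contract:
    """What the application asks of the platform (hypothetical fields)."""
    cpu_mhz: int
    memory_mb: int
    datacenter: str


@dataclass
class Node:
    """A machine in the cluster and the capacity it still has free."""
    name: str
    datacenter: str
    free_cpu_mhz: int
    free_memory_mb: int


def place(contract: Contract, nodes: list[Node]) -> Optional[Node]:
    """Reserve capacity on the first node that satisfies the contract, or return None."""
    for node in nodes:
        fits = (
            node.datacenter == contract.datacenter
            and node.free_cpu_mhz >= contract.cpu_mhz
            and node.free_memory_mb >= contract.memory_mb
        )
        if fits:
            node.free_cpu_mhz -= contract.cpu_mhz
            node.free_memory_mb -= contract.memory_mb
            return node
    return None  # no node can honor the contract right now


nodes = [
    Node("edge-1", "edge", free_cpu_mhz=500, free_memory_mb=512),
    Node("core-1", "enterprise", free_cpu_mhz=4000, free_memory_mb=8192),
]

chosen = place(Contract(cpu_mhz=1000, memory_mb=2048, datacenter="enterprise"), nodes)
print(chosen.name if chosen else "unplaced")

too_big = place(Contract(cpu_mhz=5000, memory_mb=1024, datacenter="enterprise"), nodes)
print(too_big.name if too_big else "unplaced")
```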

Applications are checked against a set of policies before they are admitted into an orchestration system. Security policies, network policies, resource quotas, RBAC, image security scanning, configuration management, and the presence of logging facilities should all be checked before an application runs on a system. Kubernetes “admission controllers” can enforce policies through engines like OPA (Open Policy Agent) and Kyverno, or even Palo Alto’s Prisma Cloud, before admission. These technologies have policy libraries that align with governance guidelines like NIST 800-53 Rev 4/5 and the Zero Trust guideline NIST 800-207.

In the past, developers would commit months of work, hold a significant change control meeting, and merge changes during an off-hours maintenance window. With modern orchestration systems, policies, automated testing, and deployment models like canary and blue-green deploys, changes can be pushed and deployed into environments many times a day. Microservice architectures allow us to break up the monolith into composable and deployable parts that make this possible. Changes are committed to a branch, tests run against that branch, and the branch is then merged and deployed into development. More QA testing is then run against the application, which is eventually promoted into staging and production. These can all be automated tasks through CI/CD tools like GitLab Runners, Jenkins, GitHub Enterprise Actions, and Gitea Actions.
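A canary deploy sends a small share of traffic to the new version and compares its behavior with the current one before promoting it. The decision step might look like the sketch below. The `canary_decision` function, the error-rate comparison, and the 1% tolerance are assumptions chosen for illustration, not a rule from any specific tool.

```python
def canary_decision(
    baseline_errors: int,
    baseline_requests: int,
    canary_errors: int,
    canary_requests: int,
    tolerance: float = 0.01,
) -> str:
    """Promote the canary only if its error rate stays within a tolerance of the baseline."""
    if canary_requests == 0:
        return "wait"  # no canary traffic observed yet
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    canary_rate = canary_errors / canary_requests
    return "promote" if canary_rate <= baseline_rate + tolerance else "rollback"


# Hypothetical request counts gathered while the canary receives a slice of traffic.
healthy = canary_decision(baseline_errors=10, baseline_requests=10_000, canary_errors=1, canary_requests=500)
failing = canary_decision(baseline_errors=10, baseline_requests=10_000, canary_errors=30, canary_requests=500)
print(healthy, failing)
```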

Setting SLOs for applications, such as quantifying the error budget to balance reliability and feature development, is important. In the Site Reliability Engineering book, Google has written extensively about application metrics and SLOs. This platform approach of forcing lifecycle management through CI/CD and automated change control through PaC helps to reduce risk, increase speed, and lower costs.
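The error budget follows directly from the SLO: if the target is a 99.9% success rate, the remaining 0.1% of requests is the budget that can be spent on failures. A minimal Python sketch of the arithmetic is below. The function names and the traffic numbers are hypothetical.

```python
def allowed_failures(slo: float, total_requests: int) -> float:
    """Number of failed requests the SLO tolerates over the measurement window."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be a fraction strictly between 0 and 1")
    return (1.0 - slo) * total_requests


def budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget still unspent (negative once the budget is exhausted)."""
    return 1.0 - failed_requests / allowed_failures(slo, total_requests)


# Hypothetical window: a 99.9% SLO over one million requests, with 250 failures so far.
budget = allowed_failures(0.999, 1_000_000)
remaining = budget_remaining(0.999, 1_000_000, 250)
print(f"budget: about {budget:.0f} failed requests, remaining: {remaining:.0%}")
```

While budget remains, a team can keep shipping features. Once it is spent, the same number argues for prioritizing reliability work.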

Destroy. When applications are decommissioned, there is much to consider, such as offboarding users, communicating with stakeholders and users, and migrating data. In highly regulated environments, auditing and logging data must be kept for years. Knowing and accommodating that process is critical, and it should be carefully planned.

Summary

The DAF API Reference Architecture provides valuable insights into the principles of enabling secure data sharing through APIs. The emphasis on forcing Lifecycle Management highlights the importance of considering Infrastructure, Security, and Application Lifecycles when sharing mission-critical intelligence. HashiCorp enables organizations to establish and enforce these fundamental lifecycle management features. By implementing these principles, organizations can enhance their reliability, security, and cost-effectiveness while sharing data with critical mission partners and allies. This allows us to share data, gain understanding, and effectively close the kill chain.

About HashiCorp

HashiCorp is the leader in multi-cloud infrastructure automation software. The HashiCorp software suite enables organizations to adopt consistent workflows to provision, secure, connect, and run any infrastructure for any application. HashiCorp open source tools Vagrant, Packer, Terraform, Vault, Consul, and Nomad are downloaded tens of millions of times each year and are broadly adopted by the Global 2000. Enterprise versions of these products enhance the open source tools with features that promote collaboration, operations, governance, and multi-data center functionality. The company is headquartered in San Francisco and backed by Mayfield, GGV Capital, Redpoint Ventures, True Ventures, IVP, and Bessemer Venture Partners. For more information, visit www.hashicorp.com or follow HashiCorp on Twitter @HashiCorp.

About IC Insiders

IC Insiders is a special sponsored feature that provides deep-dive analysis, interviews with IC leaders, perspective from industry experts, and more.