On March 24, the Defense Advanced Research Projects Agency (DARPA) posted a notice of a future Artificial Intelligence Exploration opportunity: In Pixel Intelligent Processing (IP2).

The purpose of this Special Notice (SN) is to provide public notification of additional research areas of interest to DARPA, specifically under the Artificial Intelligence Exploration (AIE) program. DARPA's mission is to make strategic, early investments in science and technology that will have a long-term positive impact on our Nation’s security. In support of this mission, DARPA has pioneered groundbreaking research and development (R&D) in Artificial Intelligence (AI) for more than five decades. Today, DARPA continues to lead innovation in AI research through a large, diverse portfolio of fundamental and applied AI R&D programs aimed at shaping a future in which machines may serve as trusted and collaborative partners in solving problems of importance to national security.

The AIE program is one key element of DARPA’s broader AI investment strategy, which will help ensure the U.S. maintains a technological advantage in this critical area. Past DARPA AI investments facilitated the advancement of “first wave” (rule-based) and “second wave” (statistical-learning-based) AI technologies. DARPA-funded R&D enabled some of the first successes in AI, such as expert systems and search, and more recently has advanced machine learning algorithms and hardware. DARPA is now interested in researching and developing “third wave” AI theory and applications that address the limitations of first- and second-wave technologies.

At this time, the DARPA Microsystems Technology Office (MTO) is interested in the following research area, to be announced as a potential topic under the AIE program:

In Pixel Intelligent Processing (IP2)

In the mid-1980s, models for visual attention (object tracking) began to adopt biological inspiration to improve accuracy and functionality. In their landmark 1985 paper “Shifts in selective visual attention: towards the underlying neural circuitry,” Koch and Ullman [1] proposed that “primates and humans [have] evolved a specialized processing focus” that can be represented by “simple neuron-like elements” and that, when enacted in a “winner-take-all” network, can explain attention and saliency. Since approximately 2015, trends have shifted towards implementing these models in deep neural networks (NNs) because they greatly outperform conventional heuristic methods in both accuracy and generalizability [2]. However, achieving high accuracy in NNs currently demands more power than is available for sensors at the edge. IP2 will reclaim the accuracy and functionality of deep NNs in power-constrained sensing platforms, with 10x fewer operations compared to state-of-the-art (SOA) NNs.

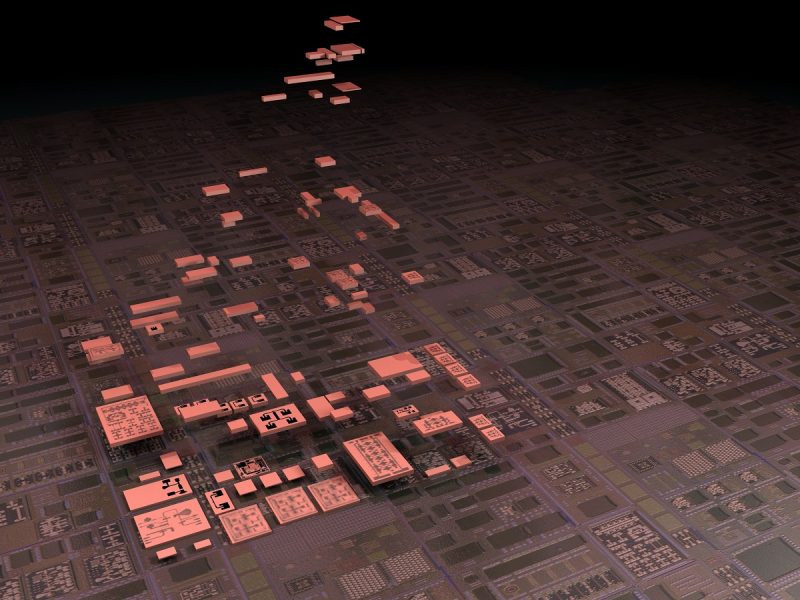

AI processing of video presents a challenging problem because high resolution, high dynamic range, and high frame rates generate significantly more data in real time than other edge sensing modalities. The number of parameters and the memory requirement for SOA AI algorithms are typically proportional to the input dimensionality and scale exponentially with the accuracy requirement. To move beyond this paradigm, IP2 will seek to solve two key elements required to embed AI at the sensor edge: data complexity, and the implementation of accurate, low-latency AI algorithms with low size, weight, and power (SWaP). First, IP2 will address data complexity by bringing the front end of the neural network into the pixel, reducing dimensionality locally and thereby increasing the sparsity of high-dimensional video data. This “curated” datastream will enable more efficient back-end processing without any loss of accuracy. Second, IP2 will develop new, closed-loop, task-oriented predictive recurrent neural network (RNN) algorithms, implemented on a field programmable gate array (FPGA), to exploit structure and saliency from the in-pixel neurons produced by the NN front end. The NN front end will identify and pass only salient information into the RNN back end, to enable high-throughput, high-accuracy third-wave vision functionality. By immediately moving the data stream to a sparse feature representation, reduced-complexity NNs will train to high accuracy while reducing overall compute operations by 10x.
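As a rough illustration of what "passing only salient information" can mean for data volume, the sketch below gates pixels by how much they changed between two frames and forwards only those that exceed a threshold. This is an assumption made for illustration: the notice does not describe how the in-pixel front end computes saliency, and temporal-difference thresholding is a simple stand-in, not the IP2 method. The function names, the toy frames, and the threshold value are all hypothetical.

```python
def sparsify_frame(prev_frame, curr_frame, threshold):
    """Return (row, col, value) events for pixels whose change exceeds threshold.

    Stand-in for an in-pixel front end: unchanged pixels are simply not emitted.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (old, new) in enumerate(zip(prev_row, curr_row)):
            if abs(new - old) > threshold:
                events.append((r, c, new))
    return events


def density(events, frame):
    """Fraction of the frame's pixels that were forwarded to the back end."""
    total_pixels = sum(len(row) for row in frame)
    return len(events) / total_pixels


# Toy 4x4 frames (hypothetical values): two pixels change strongly, one slightly.
prev_frame = [[10, 10, 10, 10],
              [10, 10, 10, 10],
              [10, 10, 10, 10],
              [10, 10, 10, 10]]
curr_frame = [[10, 10, 10, 10],
              [10, 90, 95, 10],
              [10, 10, 10, 10],
              [10, 10, 10, 12]]

events = sparsify_frame(prev_frame, curr_frame, threshold=20)
print(events)                       # [(1, 1, 90), (1, 2, 95)]
print(density(events, curr_frame))  # 0.125
```

In this toy case the back end would see 2 of 16 pixels. Whether a real front end preserves task accuracy at that sparsity depends on the learned features, which this sketch does not model.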

IP2 will require performers to demonstrate SOA accuracy with a 20x reduction in the energy-delay product (EDP) of AI algorithm processing on complex datasets such as UC Berkeley's BDD100K. BDD100K is a self-driving car dataset that incorporates geographic, environmental, and weather diversity, intentional occlusions, and a large number of classification tasks, which makes it ideal for demonstrating third-wave functionality for future large-format embedded sensors.
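To make the 20x target concrete, the sketch below uses the conventional definition of EDP as energy multiplied by delay and compares a baseline against a candidate. The notice does not say how energy or delay will be measured, so the units (millijoules and milliseconds per inference), the function names, and all numbers here are hypothetical.

```python
def energy_delay_product(energy_mj, delay_ms):
    """Conventional EDP: energy per inference times latency per inference."""
    return energy_mj * delay_ms


def edp_reduction(baseline_edp, candidate_edp):
    """How many times smaller the candidate's EDP is than the baseline's."""
    return baseline_edp / candidate_edp


# Hypothetical measurements, chosen only to illustrate the arithmetic.
baseline_edp = energy_delay_product(energy_mj=2000, delay_ms=50)     # 100000
candidate_edp = energy_delay_product(energy_mj=400, delay_ms=12.5)   # 5000.0

factor = edp_reduction(baseline_edp, candidate_edp)
print(factor)        # 20.0
print(factor >= 20)  # True
```

Because EDP is a product, the reduction can come from either term: here a 5x energy saving combined with a 4x latency saving yields 20x overall.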

Full information is available here.

Source: SAM