From IC Insider Red Hat

In our last discussion, we highlighted the need to move to an intelligence production model that neither relies on nor imposes the burdens of a multi-domain operations approach enforced by an air-gap. Moving away from that model will be challenging, but there are approaches that can meet the challenge, and we aim to lay them out. For each, we will examine the strengths, the weaknesses, and the changes to our standard modes of operation that it would require. The goal in each case is to look to the future without the constraints of current practice, while taking care to prevent the challenges we see today from arising again. In this deep dive, we look at one such approach: a model that extends the idea of OSINT to the entire analytic process.

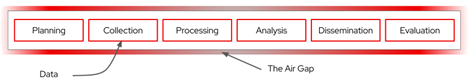

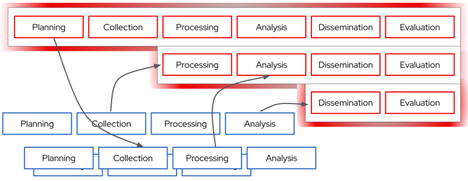

Figure 1: The intelligence production cycle as a chain of activities realized by implemented pipelines

Within the traditional model shown above, the Intelligence Community (IC) effectively owns the entire production process, including all the tools and infrastructure needed to execute it. This carries significant penalties. The first is the monetary cost and complexity of the resources needed to constitute the production chain. The IC must take extreme care to ensure the entire chain is trusted. As shown in Figure 1, these environments are air-gapped and the infrastructure is tailored to a specific purpose, so creating and maintaining it is a massive monetary burden for the IC. The model also has large hidden secondary costs. It reinforces the stovepiped intelligence production process, a siloing that arises both from how budgets are allocated and from the security controls needed to maintain this level of trust. These stovepipes carry an opportunity cost: they forgo the intelligence value that could have been realized by sharing processes, intermediate analytic products, and process improvements across silos. Because each pipeline must own every step of the process, the cost and complexity also tend to limit the diversity and number of production pipelines the IC can manage. Meanwhile, care must be taken with new data collection, since each starting point risks exposing an interface to the outside world with a direct link to the IC.

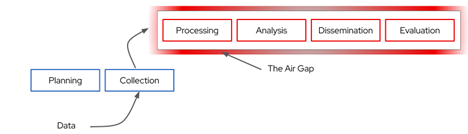

Figure 2: An OSINT-centric approach where early activities are moved outside the IC boundary

We present what we believe is a better way: don’t rely on the trust inherent in owning the pipeline, which brings the benefit of no longer being responsible for significant parts of it. Instead, the IC can rely solely on data and analysis from commercial and public sources, tied to the existing OSINT strategy, as depicted in Figure 2 (above). The OSINT strategy is already recognized as a critical growth initiative for the IC; here it is extended to encompass the entire analytical pipeline. The modern value of OSINT is undeniable, but successful adoption requires diverse sources, and scaling the model up to analyze all available OSINT is a task suited only to the commercial data production environment as a whole.

Figure 3: We suggest extending the fundamental OSINT approach even further

In addition to avoiding the cost and complexity of owning the sensors and analysis chain, the IC can vastly increase its reach and its access to non-traditional data sources that would not be economically feasible to create from scratch or grow organically. While these sources are commercial, their providers are already incentivized to make the products complete and accurate and to protect them from adversarial manipulation. These providers also have the tremendous advantage of operating in the open, which enables them to innovate, improve, and expand their capabilities. The IC can influence and foster these commercial providers through its obfuscated product acquisition choices and its monetary contributions to the commercial communities. To sustain their business, the commercial entities will continue to fund research and development programs that advance their collection and analytic capabilities. To have a more significant influence on the development of new capabilities, the IC should follow the Red Hat model. Red Hat contributes financially and intellectually to many Open Source Software (OSS) projects to influence their road maps and timelines so that they include the enterprise features its customers require. Red Hat has a 30-year history of successfully influencing and supporting the OSS community in service of its business needs. Adopting Red Hat’s OSS model would significantly increase the IC’s influence on commercial and OSINT providers in this new paradigm.

The difference between the existing OSINT model and this proposed extended OSINT model (Figure 3, above) is critical. The extended approach would aim not just to collect and use data derived from open sources but also to collect the products created by analytic tools that already exploit this data for other purposes, usually commercial purposes unrelated to the needs of the IC. The IC would no longer need to direct, task, choose targets for, or control the data collection process in order to generate products. Instead, the IC would transition from creator to consumer. As a consumer, it would exploit the products to aid decision-makers. This exploitation would undoubtedly remain behind a defensive air-gap, but the nature and scope of the data flow across this boundary would be dramatically altered.

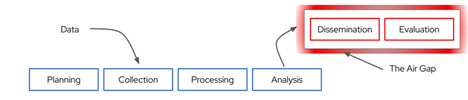

This model has a secondary advantage when a commercial analytic processing chain can be moved quickly into high-side, protected environments and reconnected to IC-specific upstream sources. A hybrid approach with the existing production models would benefit from converting analytic processes into encapsulated AI/ML models. The commercial providers would validate these AI/ML models through use on their own production pipelines. The IC could then leverage the validated models in its production pipelines by connecting them to high-side sensing, collection, and non-OSINT data sources. As seen in Figure 4 (below), the hybrid model allows the IC to take advantage of low-side pipeline production while continuing to protect sensitive targets and collection sources or capabilities. With AI/ML tools, including foundation models, only limited retraining and correction would be needed to account for more capable IC-specific collection assets. This allows the IC to capture the innovation speed, initial proof of value, and scaling of low-side development.

Figure 4: A hybrid approach makes this a more tractable adoption journey and allows flexibility and risk-based decisions about when and where to deploy analytical tools.

This new model gains value and reliability as more OSINT is incorporated and individual anomalies become more apparent, so scaling beyond the IC’s inherent capabilities is essential. By relying on a vast, even maximal, diversity of independent sources and pipelines, analysis is based on the “wisdom of the crowd.” Combining data and higher-order analysis via statistical error correction allows errors to cancel or average out. Monitoring for anomalies then points to either valuable data or true error. With enough redundancy in the analytic process, the IC can simply drop any provider from the ensemble that is anomalous or otherwise unsuitable. This flexibility makes the approach robust and resilient to tampering and manipulation by adversaries.
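To make the ensemble idea concrete, here is a minimal sketch in Python of one way such statistical error correction could work. It is an illustration under stated assumptions, not a description of any fielded IC or Red Hat method: the provider names, the numeric estimates, the function name `robust_consensus`, and the 3.5 cutoff are all hypothetical, and the median/MAD (median absolute deviation) outlier test is one common robust-statistics technique chosen here for simplicity.

```python
from statistics import median


def robust_consensus(estimates, threshold=3.5):
    """Combine independent provider estimates and flag anomalous providers.

    `estimates` maps a (hypothetical) provider name to a numeric estimate of
    the same quantity. Returns (consensus, flagged), where `flagged` is the
    set of providers whose estimate lies far from the ensemble median.
    """
    values = list(estimates.values())
    center = median(values)
    # Median absolute deviation: a spread measure that outliers barely move.
    mad = median([abs(v - center) for v in values])

    flagged = set()
    for name, value in estimates.items():
        if mad == 0:
            # Most providers agree exactly; any deviation at all stands out.
            is_anomalous = value != center
        else:
            # 0.6745 rescales MAD so the score is comparable to a z-score
            # when the underlying errors are roughly normal.
            is_anomalous = 0.6745 * abs(value - center) / mad > threshold
        if is_anomalous:
            flagged.add(name)

    # Average the remaining estimates so independent errors tend to cancel.
    kept = [v for name, v in estimates.items() if name not in flagged]
    consensus = sum(kept) / len(kept)
    return consensus, flagged


# Hypothetical estimates of one quantity from five independent providers.
estimates = {
    "provider_a": 10.2,
    "provider_b": 9.8,
    "provider_c": 10.1,
    "provider_d": 9.9,
    "provider_e": 25.0,
}
consensus, flagged = robust_consensus(estimates)
print(consensus, flagged)
```

A flagged provider is not automatically wrong. As noted above, an anomaly may be a true error or a valuable signal, so in practice the flag would more plausibly trigger review than silent removal.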

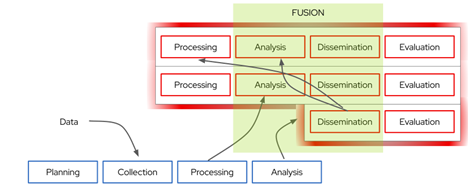

The approach is amenable to data fusion across a large ensemble of analytic products, with the IC refining its focus on this higher-order analysis phase. This is a necessity: without direct control of or influence over the type and nature of the OSINT data collection or its analysis, individual products will likely be less useful and valuable to the IC or decision-makers. However, combining and augmenting them with other sources and analyses will allow the IC to extract the necessary hidden data signals (Figure 5 below).

Figure 5: Data fusion becomes more critical and valuable to the process

The loss of direct control over the fashioning of these analytic chains would require additional care and oversight. For example, legal and policy compliance would require the IC to identify and prevent the exploitation of these pipelines and the improper integration of protected data classes, such as US persons, into the analysis. Fortunately, commercial data analysis is becoming sophisticated enough to tailor data, analytical products, and the associated offerings. Newly launched communities such as the AI Alliance, of which Red Hat is a founding member, allow the IC to home in on and address its concerns in areas such as the trustworthiness and the legal and ethical use of AI. In addition, the analytic process, which is fundamentally a data reduction process, anonymizes most data and sources. An extensive suite of overlapping providers would also grant the flexibility to drop those that violate these requirements without significantly impacting the IC’s overall capabilities. Nevertheless, this approach may require new clarity on avoiding improper collection, particularly on how prohibited targets or information can be excluded from analysis in late stages or, if necessary, on whether the analysis process sufficiently and irreversibly obfuscates them.

The 2023 DoDIIS conference emphasized the importance of adopting advanced analytical techniques and open-source intelligence, and the oncoming wave of that adoption. Effective adoption in the IC will require new, agile approaches that acknowledge the modern challenges of a remote workforce and the growing reach and sophistication of a commercial industry able to displace traditional IC capabilities. Don’t forget to register for our webinar on January 18th at 11am ET.

About the authors:

Michael Epley has been helping the US defense and National Security communities use and adopt open-source software over the last two decades, with practical experience as a software developer and enterprise architect. During his tenure at Red Hat, Michael has passionately driven the adoption of key technology: cloud and Kubernetes, tactical edge/forward-deployed systems, data analytics tools and platforms, and disconnected operations, always in the context of the security and compliance concerns unique to this sector. Michael has a BS in Mathematics and Mechanical Engineering from Virginia Tech and a JD from The University of Texas School of Law.

For the last decade, Austen Bruhn has been developing data/IT architectures and strategies for the U.S. public sector, commercial enterprises, and nonprofit organizations. For the past 4 years, he has been working with the U.S. Department of Defense and the Intelligence Community (IC) to accelerate their mission through the modernization of the applications that power our national security assets. He has worked in various roles as a software engineer, space vehicle systems engineer, and infrastructure engineer and is now leveraging that experience as a solution architect at Red Hat.

About IC Insiders

IC Insiders is a special sponsored feature that provides deep-dive analysis, interviews with IC leaders, perspective from industry experts, and more. Learn how your company can become an IC Insider.