From IC Insider Clarifai

By Rob Watson, VP of Public Sector Sales, Clarifai

Suppose the intelligence community has become aware that a foreign nation has developed a new type of plane, codenamed “D37.” Analysts know that it exists, but now they need to find where in the world the aircraft is located.

The intelligence community has access to on-demand geospatial imagery, such as Maxar’s G-EGD, which provides visibility into locations worldwide. Reviewing these images quickly enough to find the D37 is a job for AI, which can manage this data at scale: analysts can train an AI model on the geospatial imagery to recognize the D37.

Recognizing the D37 will depend on the attributes gathered from the forthcoming intelligence. Depending on the effectiveness of the intelligence gathered, the following three levels of information may exist:

- Distinct characteristics of the target of interest: for example, the D37 has broader wings than most fighter jets, dual engines on either side of the fuselage, and large pods mid-wing to carry sensory equipment.

- CAD model of the plane.

- Small dataset of actual D37 plane images labeled and available.

Solving the challenge with synthetic data

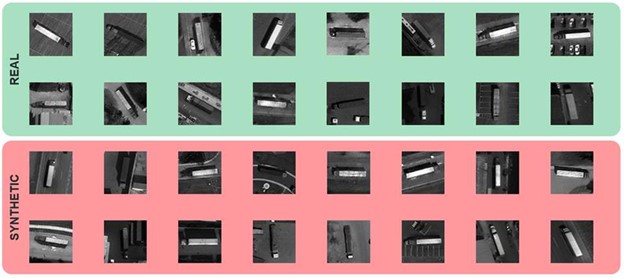

One way to solve this problem is to use synthetic data to generate training data for machine learning models. To this end, Clarifai trained models that demonstrated incredibly strong performance, even on classes as fine-grained as plane wing shape, despite never having seen a real example of a plane. This effort confirmed the efficacy of synthetic data for training neural networks used in object classification and detection. Below are some examples of synthetic data and how similar they can appear to real data using images of semi-trailer trucks.

Types of learning based on level of data

In our example of the D37 from above, we described three levels of available information. We’ll now explore those levels as categories of learning and assign them proper names. Two of these categories – “zero-shot learning” and “few-shot learning” – are commonly used terms in AI.

“Zero-shot learning” means a model makes predictions about a class it has never seen during training. Although we’ve been talking about airplanes in our search for the D37, an easy way to explain zero-shot learning is through familiar, well-known vehicles like cars. For example, given a set of images of automobiles to be classified, as well as text descriptions of different vehicles, an AI that has been trained to recognize cars – but has never seen a taxi – can still recognize a taxi. We just need to let it know that taxis look like yellow cars with signs on the top and letters on the side.

The image below shows a mix of cars, NYC taxis, and Mexico City taxis. The NYC taxis are yellow Ford Crown Victoria sedans with a numbered sign on the top. The Mexico City “Vocho” taxis are green Volkswagen Beetles with the word “TAXI” written on the side and on a placard on top. It’s a trivial challenge for a person – even one who has never previously seen an NYC or Mexico City taxi – to now identify which images contain which.
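One way to picture the description-driven case is attribute matching. The sketch below assumes some model already produces per-image scores for generic attributes (color, roof sign, side lettering), and that each new class is defined only by the attributes its text description mentions. The attribute names, the scores, and the `zero_shot_classify` helper are hypothetical illustrations, not Clarifai’s method.

```python
from typing import Dict

# Classes expressed as expected attribute values (hypothetical names),
# written from the text descriptions rather than from labeled images.
CLASS_DESCRIPTIONS: Dict[str, Dict[str, float]] = {
    "car": {"yellow": 0.0, "green": 0.0, "roof_sign": 0.0, "side_lettering": 0.0},
    "nyc_taxi": {"yellow": 1.0, "green": 0.0, "roof_sign": 1.0, "side_lettering": 0.0},
    "mexico_city_taxi": {"yellow": 0.0, "green": 1.0, "roof_sign": 1.0, "side_lettering": 1.0},
}


def zero_shot_classify(
    attribute_scores: Dict[str, float],
    descriptions: Dict[str, Dict[str, float]] = CLASS_DESCRIPTIONS,
) -> str:
    """Return the class whose described attributes best match the scores."""

    def squared_distance(expected: Dict[str, float]) -> float:
        return sum(
            (attribute_scores.get(name, 0.0) - value) ** 2
            for name, value in expected.items()
        )

    return min(descriptions, key=lambda label: squared_distance(descriptions[label]))


# Assumed output of an attribute model for a single image.
scores = {"yellow": 0.9, "green": 0.05, "roof_sign": 0.8, "side_lettering": 0.1}
print(zero_shot_classify(scores))  # nyc_taxi
```

No taxi image is needed to define the taxi classes here; only the descriptions change when a new class is added.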

“Few-shot learning” means making predictions based on only a few available samples. This is also very easy for humans – even without a description, a human could be shown the above image, told which cars are which type of taxi, and then easily identify NYC and Mexico City taxis in other images.
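A common way to illustrate few-shot prediction is a nearest-prototype classifier: average the feature vectors of the handful of labeled examples per class, then assign a new image to the closest average. The sketch below assumes the feature vectors come from some pretrained image model; the two-dimensional vectors and the helper names are made up for illustration.

```python
from collections import defaultdict
from math import dist
from typing import Dict, List, Sequence, Tuple


def build_prototypes(
    examples: Sequence[Tuple[str, Sequence[float]]]
) -> Dict[str, List[float]]:
    """Average the feature vectors of the few labeled examples per class."""
    grouped = defaultdict(list)
    for label, features in examples:
        grouped[label].append(features)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(features: Sequence[float], prototypes: Dict[str, List[float]]) -> str:
    """Assign the label of the nearest class prototype (Euclidean distance)."""
    return min(prototypes, key=lambda label: dist(features, prototypes[label]))


# Two labeled examples per class; the feature values are illustrative only.
support = [
    ("nyc_taxi", [0.9, 0.1]),
    ("nyc_taxi", [0.8, 0.2]),
    ("mexico_city_taxi", [0.1, 0.9]),
    ("mexico_city_taxi", [0.2, 0.7]),
]
prototypes = build_prototypes(support)
print(classify([0.7, 0.3], prototypes))  # nyc_taxi
```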

“Half-shot learning” addresses an important middle ground where there is some source of ground truth about the object of interest but no imagery of the object in the domain of interest. In simple terms, this could be a CAD model of an object like a teapot. Although we don’t have any actual photos of the teapot, we can train a model on rendered images of it and teach the model to recognize the real object.

At Clarifai, we’ve conducted tests of these methods:

Zero-Shot Learning – “Only a description of an object is available”

For example:

“The D37 has wider wings than most fighter jets, it has dual engines on either side of the fuselage, and it has large pods mid-wing to carry sensory equipment.”

In the case where only a description of the plane is available, we achieved robust zero-shot detection and classification by utilizing open-source, fully synthetic datasets as well as commercial synthetic data generation systems. Variations of the D37 can be generated and placed into diverse scenarios and settings. Some advantages of this system include accurate, reliable ground truth annotations; the ability to simulate the D37 in diverse scenarios and settings, such as varying the plane’s location, time of day, and position; and the capability to regenerate synthetic data based on new requirements and scenarios.
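The idea of varying location, time of day, and position can be sketched as sampling scenario parameters that a rendering tool would then consume. Everything in this sketch is an assumption made for illustration: the `Scenario` fields, the airfield names, and the `sample_scenarios` helper do not come from any particular synthetic data product.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Scenario:
    airfield: str       # where the aircraft is placed
    hour_of_day: int    # 0-23; would drive lighting and shadows in a renderer
    heading_deg: float  # orientation of the aircraft on the ground


def sample_scenarios(count: int, airfields: Sequence[str], seed: int = 0) -> List[Scenario]:
    """Draw random scenario parameters; a fixed seed makes the set reproducible."""
    rng = random.Random(seed)
    return [
        Scenario(
            airfield=rng.choice(airfields),
            hour_of_day=rng.randrange(24),
            heading_deg=rng.uniform(0.0, 360.0),
        )
        for _ in range(count)
    ]


scenarios = sample_scenarios(3, ["desert_strip", "coastal_base", "mountain_runway"])
for scenario in scenarios:
    print(scenario)
```

Because the parameters are generated rather than observed, the same call with a different seed or a new list of settings yields a fresh dataset specification when requirements change.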

Half-Shot Learning – “Only a CAD model of an object is available”

This middle ground is where we don’t have any photos of the D37, but we do have a CAD model. Synthetic data generation is uniquely suited to help with this situation. Although it is impossible to collect and label large amounts of real data because that data is unavailable or nonexistent, it is possible to render a CAD model of the object type on multiple backgrounds, train a detector, and run it on large quantities of imagery. With enough synthetic data, machine learning models can be deployed robustly and be leveraged for analysts’ needs.
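The step of placing a rendered object on a background can be sketched as simple compositing. This minimal example assumes the render and its boolean mask are already available as arrays (here a filled rectangle stands in for a rendered CAD model) and that the object fits entirely inside the background; the `composite` helper is hypothetical. Since the bounding box is computed from the mask, the label is exact by construction.

```python
import numpy as np


def composite(background, render, mask, top, left):
    """Paste a rendered object onto a background; return the image and its box.

    background: (H, W) array. render and mask: (h, w) arrays, mask is boolean.
    The box is (x_min, y_min, x_max, y_max) in pixels, max edges exclusive.
    Assumes the object fits fully inside the background at (top, left).
    """
    image = background.copy()
    h, w = mask.shape
    region = image[top:top + h, left:left + w]  # a view into `image`
    region[mask] = render[mask]                 # overwrite only object pixels
    ys, xs = np.nonzero(mask)
    box = (
        left + int(xs.min()),
        top + int(ys.min()),
        left + int(xs.max()) + 1,
        top + int(ys.max()) + 1,
    )
    return image, box


rng = np.random.default_rng(0)
background = rng.random((64, 64))      # stand-in for a satellite image tile
render = np.ones((8, 12))              # stand-in for a rendered CAD model
mask = np.zeros((8, 12), dtype=bool)
mask[2:6, 1:11] = True                 # pixels covered by the object

image, box = composite(background, render, mask, top=10, left=20)
print(box)  # (21, 12, 31, 16)
```

Repeating the call over many backgrounds and positions produces labeled training images without any manual annotation.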

Few-Shot Learning – “A small set of images available”

Finally, we have the case where there is a limited amount of real labeled data. To address the few-shot domain, Clarifai showed results with both ~1% (1 real image per physical airport) and 10% of the real training dataset of RarePlanes, demonstrating incredibly strong detection performance with few real-world images (>90 Average Precision @ IoU 0.5 with ~1% of the real training set). This clearly shows that a combination of synthetic data and Clarifai’s modeling capabilities results in powerful detectors, even when very little real-world data is available.
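For readers unfamiliar with the metric, “IoU 0.5” refers to intersection over union: a predicted box counts as a correct detection only if its overlap with the ground-truth box, divided by their combined area, is at least 0.5. The sketch below shows that overlap test for axis-aligned boxes; the box format and the helper name are assumptions for illustration, and the full Average Precision computation is not shown.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


ground_truth = (0.0, 0.0, 10.0, 10.0)
close_prediction = (2.0, 0.0, 12.0, 10.0)  # overlap 80, union 120
loose_prediction = (5.0, 0.0, 15.0, 10.0)  # overlap 50, union 150

print(iou(ground_truth, close_prediction) >= 0.5)  # True
print(iou(ground_truth, loose_prediction) >= 0.5)  # False
```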

Using synthetic data in the manner described above, we can train accurate, reliable machine-learning models that can detect rare objects such as the fictional D37. Given the sheer quantity of image data, it is not feasible to throw increasing numbers of people at a project to spot a plane from orbit. Not only would such an effort be nearly impossible to coordinate, but new images would be taken at regular intervals, and humans poring over thousands of images day after day would inevitably miss something. However, it’s absolutely practical to use AI models to accomplish this task, and synthetic data can provide their training, even when information is scarce.

Contact us to have your geo-intelligence empowered by Clarifai.

About Clarifai

Clarifai offers a leading computer vision, NLP, and deep learning AI lifecycle architecture for modeling unstructured image, video, text, and audio data. Our technology helps public sector organizations solve complex use cases through object classification, detection, tracking, geolocation, visual search, and natural language processing. Clarifai offers on-premise, cloud, bare-metal, and classified deployments.

Clarifai has long served the missions of the U.S. federal government, including the Department of Defense, the Intelligence Community, and Civilian agencies, with state-of-the-art computer vision and natural language processing AI solutions supporting commanders with decision advantage. Use cases include recognizing and tracking threats, detecting objects via aerial and satellite imagery, optimizing equipment maintenance, finding victims in disaster zones, and enhancing security at U.S. government facilities. Clarifai technology is fielded across numerous active mission-critical military deployments.

Founded in 2013 by Matt Zeiler, Ph.D., Clarifai has been a market leader in AI since winning the top five places in image classification at the 2013 ImageNet Challenge. Clarifai was named a leader in Forrester’s New Wave Computer Vision Platforms report, the only startup to receive a differentiated rating. Clarifai is headquartered in Delaware with more than 120 employees and offices in New York City, San Francisco, Washington, D.C., and Tallinn, Estonia. For more information, please visit https://www.clarifai.com/solutions/public-sector-ai.

About IC Insiders

IC Insiders is a special sponsored feature that provides deep-dive analysis, interviews with IC leaders, perspective from industry experts, and more. Learn how your company can become an IC Insider.

Photo credits:

Mexico City Taxis:

jm3 on Flickr under CC Attribution-ShareAlike 2.0 Generic

Steve Cadman on Flickr under CC Attribution-ShareAlike 2.0 Generic

NYC Taxis:

Cars:

Rendered Teapot:

Dhatfield on Wikimedia under CC Attribution-Share Alike 3.0 Unported